Projects

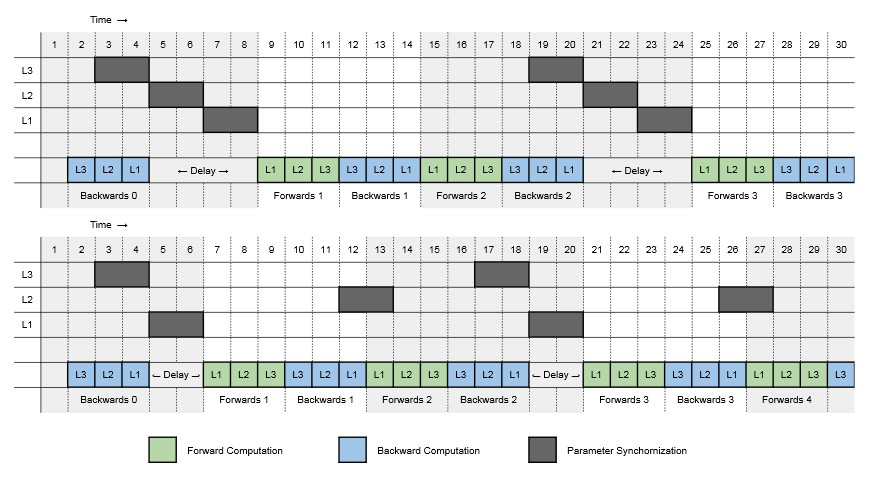

Communication-Efficient Distributed SGD

In my final semester at Michigan I took a course called Systems for AI where we studied state-of-the-art software systems for artificial intelligence. Topics included systems for deep learning, machine learning, and reinforcement learning; runtime execution and compilers for AI; distributed and federated learning systems; serving systems and inference; and scheduling and resource management in AI clusters.

For the final project in the course, my teammate and I studied methods for speeding up distributed SGD training by reducing communication between nodes. The course also held a competition for the fastest code to reach 90% accuracy on the SVHN dataset using a three-node CPU cluster; my implementation took first place.
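The post does not say which communication-reduction methods were studied, so the sketch below illustrates one widely used technique as an assumption: top-k gradient sparsification with error feedback. Each worker sends only its k largest-magnitude gradient entries and keeps the rest in a local residual that is added back next round. The names `top_k_sparsify`, `Worker`, and `aggregate` are hypothetical, and this is not presented as the project's implementation.

```python
from typing import List, Tuple

SparseGrad = Tuple[List[int], List[float]]  # (indices, values)


def top_k_sparsify(grad: List[float], k: int) -> SparseGrad:
    """Keep only the k largest-magnitude entries of a gradient."""
    order = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    idx = sorted(order[:k])
    return idx, [grad[i] for i in idx]


class Worker:
    """A data-parallel worker that compresses its gradient before sending it."""

    def __init__(self, dim: int, k: int) -> None:
        self.k = k
        self.residual = [0.0] * dim  # error feedback: gradient mass not yet sent

    def compress(self, grad: List[float]) -> SparseGrad:
        # Add back what was dropped in earlier rounds so it is delayed, not lost.
        corrected = [g + r for g, r in zip(grad, self.residual)]
        idx, vals = top_k_sparsify(corrected, self.k)
        sent = set(idx)
        self.residual = [0.0 if i in sent else c for i, c in enumerate(corrected)]
        return idx, vals


def aggregate(messages: List[SparseGrad], dim: int) -> List[float]:
    """Average the sparse gradients from all workers into one dense update."""
    avg = [0.0] * dim
    for idx, vals in messages:
        for i, v in zip(idx, vals):
            avg[i] += v / len(messages)
    return avg
```

With k set to a small fraction of the model size, each worker transmits k (index, value) pairs per step instead of the full gradient; the residual ensures that small coordinates are eventually applied rather than discarded.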

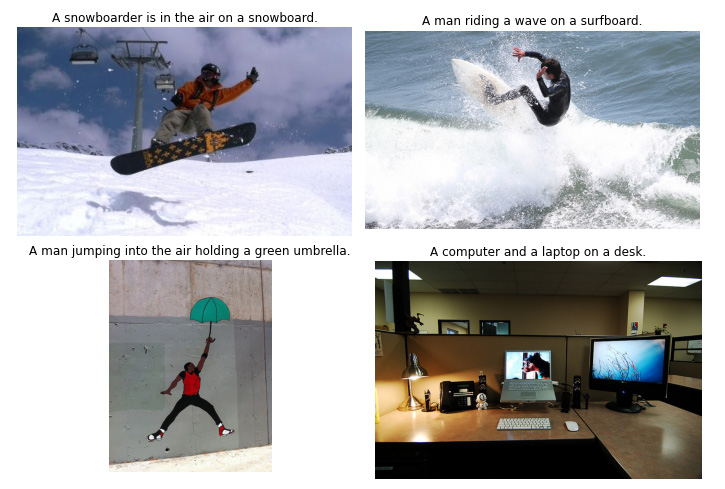

Image Captioning with Vision Transformers and GPT-2

Transformer architectures rapidly overtook RNNs as the model of choice for state-of-the-art NLP. In late 2020, transformers started to gain popularity among computer vision researchers when Google Brain released ViT, a Vision Transformer model that achieved state-of-the-art performance on image classification. I fine-tuned an encoder-decoder transformer with a ViT encoder and a GPT-2 decoder for image captioning.
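The key idea that lets a transformer encoder read an image is that ViT splits it into fixed-size patches and treats each flattened patch as one token. The minimal sketch below shows only that patching step, assuming an image stored as nested lists of shape height × width × channels; the function name `patchify` is hypothetical. In ViT itself, each patch vector is then linearly projected and combined with a position embedding before entering the encoder, and in the captioning model the GPT-2 decoder attends to the encoder outputs through cross-attention. Neither of those steps is shown here.

```python
from typing import List

Image = List[List[List[float]]]  # [height][width][channels]


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split an HxWxC image into non-overlapping patch x patch tiles and
    flatten each tile (row by row, channels innermost) into one token vector."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image must divide evenly into patches"
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            vec: List[float] = []
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    vec.extend(image[r][c])
            tokens.append(vec)
    return tokens
```

For a 224×224 RGB image with 16×16 patches, this yields (224 / 16)² = 196 tokens, each with 16 · 16 · 3 = 768 values.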

Static Branch Prediction for LLVM IR Using Machine Learning

In my compilers class at Michigan, my project team and I trained both classical and deep-learning models to predict the bias of an assembly branch instruction, that is, how often the branch is taken. Compilers rely on accurate branch prediction to make intelligent decisions when optimizing code. Our best model outperformed LLVM's built-in branch predictor.
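Training a model to predict branch bias requires ground-truth labels. One plausible way to obtain them is to run profiled programs, count how often each branch is taken, and turn those counts into targets. The minimal sketch below assumes a hypothetical `BranchProfile` record and an illustrative 0.8 threshold for bucketing branches into classes. The post does not describe the project's actual labeling scheme, features, or thresholds.

```python
from dataclasses import dataclass


@dataclass
class BranchProfile:
    """Profile counts for one conditional branch (hypothetical data format)."""
    taken: int
    not_taken: int


def branch_bias(p: BranchProfile) -> float:
    """Fraction of executions in which the branch was taken (a regression target)."""
    total = p.taken + p.not_taken
    if total == 0:
        raise ValueError("branch never executed; bias is undefined")
    return p.taken / total


def bias_class(p: BranchProfile, threshold: float = 0.8) -> str:
    """Bucket a branch for classification: strongly taken, strongly not taken, or unbiased."""
    b = branch_bias(p)
    if b >= threshold:
        return "taken"
    if b <= 1.0 - threshold:
        return "not_taken"
    return "unbiased"
```

A classifier trained on these buckets (or a regressor trained on `branch_bias` directly) can then be compared against the compiler's own static estimates on held-out programs.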

Natural Language Inference

For the final project in my natural language processing class at Michigan, each project team was tasked with solving three natural language inference (NLI) benchmarks:

- CommonsenseQA: given a common-sense question, choose the correct answer from five multiple-choice options.
- Conversational Entailment: given a short dialogue between speakers, determine whether a given hypothesis can be inferred from the dialogue.
- Everyday Actions Text (EAT): determine whether a short story is plausible and, if it isn't, identify where the story stops making sense.

Natural language inference is a notoriously difficult problem that requires machines to reason about the meaning of language. Deep learning has been a major breakthrough in NLP research and has advanced the state of the art for NLI. My group and I applied large transformer models to these benchmarks and, out of twenty-five teams in the class, placed fifth on the Conversational Entailment benchmark and third on the EAT benchmark.
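A common way to apply a transformer encoder to a multiple-choice benchmark like CommonsenseQA is to score each (question, answer) pair separately, normalize the scores across the choices with a softmax, and pick the highest. The sketch below shows only that decision step. The `score` callable stands in for a fine-tuned transformer with a scalar output head, which is an assumption; `word_overlap` is a hypothetical toy scorer for illustration only, not anything the project used.

```python
import math
from typing import Callable, List, Tuple

Scorer = Callable[[str, str], float]  # stand-in for a fine-tuned transformer


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def answer_multiple_choice(question: str, choices: List[str],
                           score: Scorer) -> Tuple[int, List[float]]:
    """Score each (question, choice) pair independently, normalize across choices,
    and return the index of the most probable answer with all probabilities."""
    logits = [score(question, choice) for choice in choices]
    probs = softmax(logits)
    best = max(range(len(choices)), key=lambda i: probs[i])
    return best, probs


def word_overlap(question: str, choice: str) -> float:
    """Toy scorer for illustration only: counts shared lowercase words."""
    return float(len(set(question.lower().split()) & set(choice.lower().split())))
```

During fine-tuning, the same softmax over choices is typically paired with a cross-entropy loss against the index of the correct answer.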

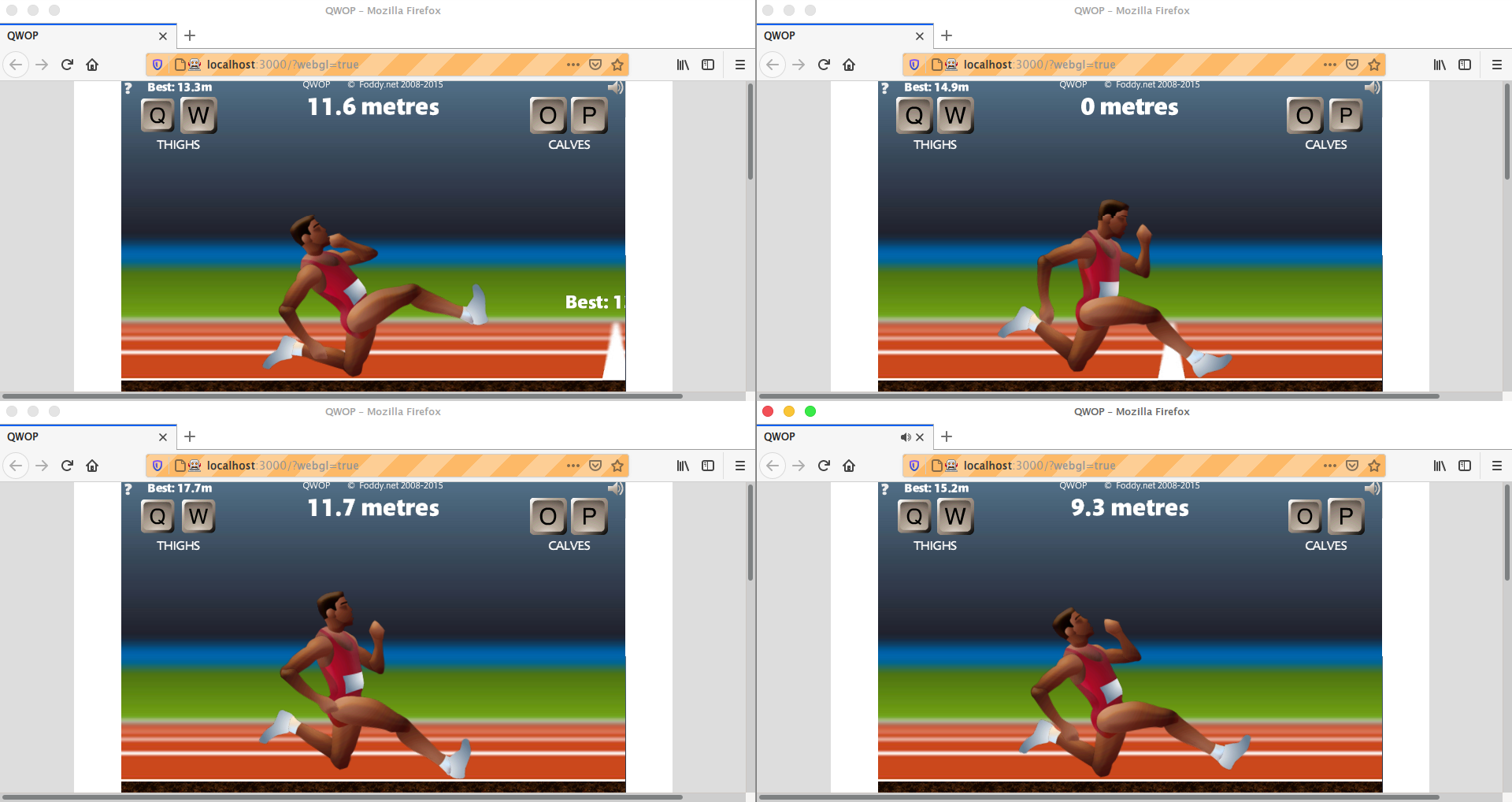

Using RL to Beat QWOP

A friend of mine is really good at QWOP, a video game in which you control a sprinter's legs to run the 100-meter dash. He is so good that he's ranked in the top ten globally. I wanted to beat him, but I'm terrible at the game, so I decided the only way to win was to resort to reinforcement learning.

I trained an agent to play the game using a reinforcement learning algorithm called Proximal Policy Optimization (PPO). The agent can beat the game, but it still isn't faster than my friend.
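The heart of PPO is its clipped surrogate objective: the probability ratio between the new and old policy is clipped to [1 − ε, 1 + ε], and the smaller of the clipped and unclipped terms is used, so a single update cannot move the policy too far from the one that collected the data. The sketch below computes that loss for a batch of plain floats. It is a standalone illustration, not the project's training code; the value-function and entropy terms used in full PPO are omitted, and ε = 0.2 is assumed as a common default.

```python
import math
from typing import List


def ppo_clip_loss(logp_new: List[float], logp_old: List[float],
                  advantages: List[float], eps: float = 0.2) -> float:
    """Negative PPO clipped surrogate objective, averaged over a batch.

    ratio = pi_new(a|s) / pi_old(a|s), computed from log-probabilities."""
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        # Taking the min gives a pessimistic bound on the improvement.
        total += min(ratio * adv, clipped * adv)
    return -total / len(advantages)  # negate because optimizers minimize
```

For example, if the new policy doubles an action's probability (ratio 2) and its advantage is positive, the objective credits only a ratio of 1.2; if the advantage is negative, the full ratio of 2 is kept, so harmful moves are penalized in full.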

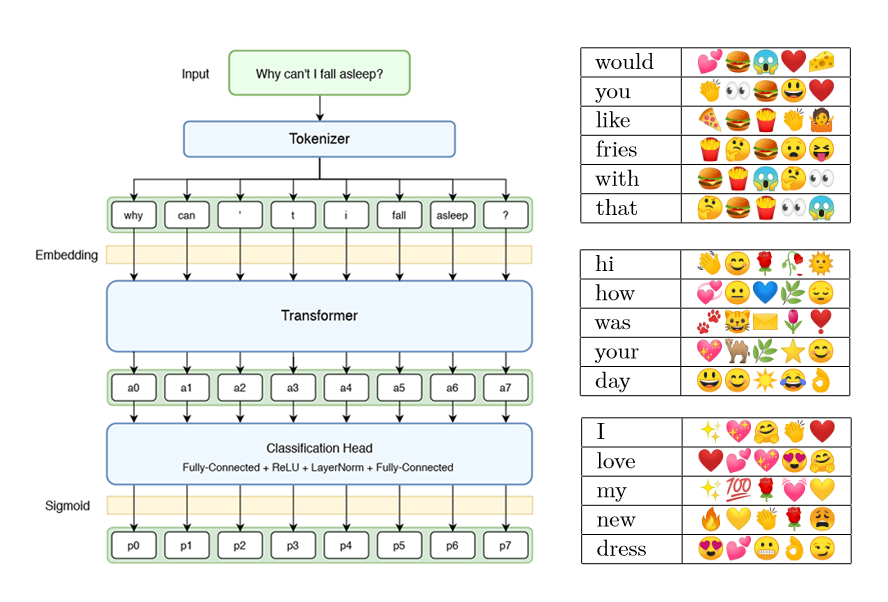

Emoji-AI: Learning to Predict Emojis from 1.8 Million Tweets

In my second semester at Michigan, I took a special topics class called Applied Machine Learning for Affective Computing. In the class we used machine learning techniques to process natural language, recognize speech, and understand and predict human emotions. What better encapsulates the full range of human emotion than emojis? For my final project, I trained a model to predict which emojis should accompany a natural language input.
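The post does not say how the tweet dataset was labeled. One common approach, assumed here, is distant supervision: the emojis a user wrote become the labels and the remaining text becomes the model input. The sketch below splits a tweet that way using approximate Unicode code-point ranges; `EMOJI_RANGES`, `is_emoji`, and `split_tweet` are hypothetical names, and the ranges are a simplification rather than a complete emoji definition.

```python
from typing import List, Tuple

# Approximate emoji detection (an assumption for this sketch): these ranges cover
# most single-code-point emojis but also include a few non-emoji symbols, and
# multi-code-point emojis (flags, ZWJ sequences) are not handled correctly.
EMOJI_RANGES = [
    (0x1F300, 0x1FAFF),  # pictographs, emoticons, transport, supplemental symbols
    (0x2600, 0x27BF),    # miscellaneous symbols and dingbats
]
VARIATION_SELECTOR_16 = "\ufe0f"  # requests emoji presentation; no meaning on its own


def is_emoji(ch: str) -> bool:
    cp = ord(ch)
    return any(lo <= cp <= hi for lo, hi in EMOJI_RANGES)


def split_tweet(tweet: str) -> Tuple[str, List[str]]:
    """Turn one tweet into a training pair: (text without emojis, emojis in order)."""
    labels = [ch for ch in tweet if is_emoji(ch)]
    kept = [ch for ch in tweet if not is_emoji(ch) and ch != VARIATION_SELECTOR_16]
    text = " ".join("".join(kept).split())  # collapse leftover whitespace
    return text, labels
```

Because a tweet can contain several emojis, the labels from this kind of split naturally suit a multi-label or ranked prediction setup.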

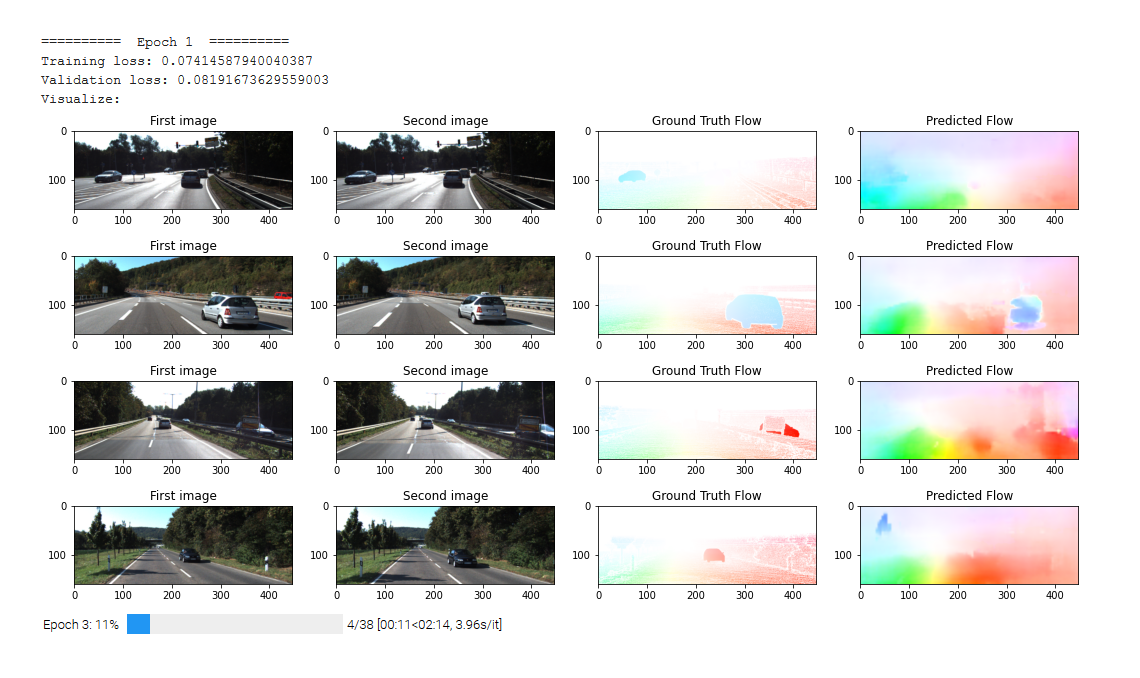

Optical Flow Estimation Using PWC‑Net

Optical flow estimation is the task of estimating per-pixel motion between consecutively captured images. For the final project in my computer vision class, my group and I implemented and trained a large deep-learning model to estimate optical flow in images captured during everyday driving scenarios.
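A core building block of PWC-Net is the cost volume. For each pixel of the first image's feature map, it computes the channel-averaged dot product with nearby pixels of the second image's features (which PWC-Net first warps using the flow estimated at the coarser pyramid level), within a limited displacement range. The sketch below computes a cost volume for one pyramid level on nested lists. It assumes the second feature map is already warped, uses zero padding at the borders, and is a plain illustration rather than the project's implementation.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # [height][width][channels]


def cost_volume(f1: FeatureMap, f2: FeatureMap, max_disp: int) -> List[List[List[float]]]:
    """For each pixel in f1, return (2*max_disp + 1)**2 matching costs: the mean
    channel-wise dot product with f2 at every offset (dy, dx) in [-max_disp, max_disp],
    ordered by dy then dx. Offsets that fall outside the image score 0."""
    h, w, n = len(f1), len(f1[0]), len(f1[0][0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            costs = []
            for dy in range(-max_disp, max_disp + 1):
                for dx in range(-max_disp, max_disp + 1):
                    y2, x2 = y + dy, x + dx
                    if 0 <= y2 < h and 0 <= x2 < w:
                        dot = sum(a * b for a, b in zip(f1[y][x], f2[y2][x2]))
                        costs.append(dot / n)
                    else:
                        costs.append(0.0)  # zero padding outside the image
            row.append(costs)
        out.append(row)
    return out
```

If a feature moves one pixel to the right between frames, the largest cost at that pixel appears at offset (dy, dx) = (0, +1). The network's flow estimator consumes this volume rather than a hard argmax.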

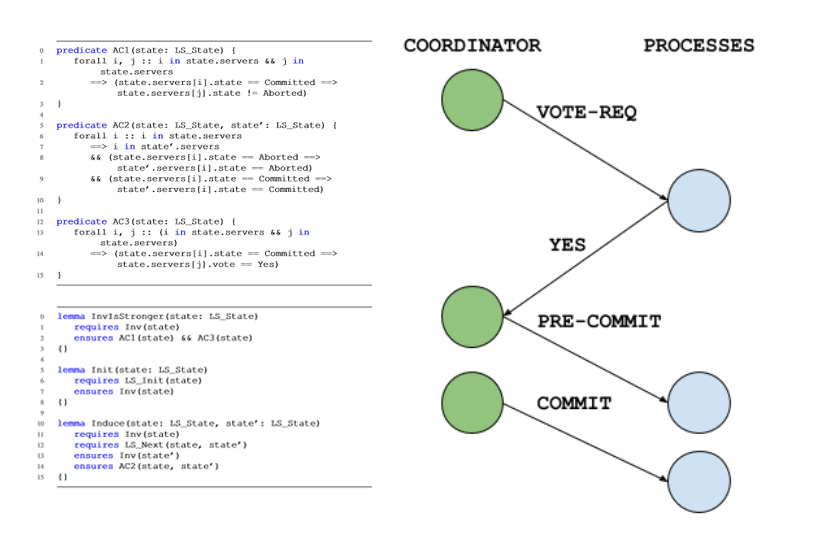

2.5-PC: A Faster and Non-Blocking Atomic Commit Protocol

Atomic commitment is a fundamental problem in distributed systems. There are two popular protocols for atomic commitment: two-phase commit (2PC) and three-phase commit (3PC). 2PC is faster than 3PC; however, it can block under certain failure scenarios. For example, if the coordinator crashes after participants have voted to commit but before they learn the outcome, those participants may have to wait until the coordinator recovers. For the final project in my distributed systems course, my group and I invented 2.5-phase commit, an atomic commit protocol that is non-blocking like 3PC but requires only as many message round trips as 2PC.
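The post does not describe 2.5PC in enough detail to sketch it, so the code below shows only the classic 2PC baseline it improves on. It covers the coordinator's decision rule and the uncertainty window that causes 2PC to block. The names are hypothetical, and treating a missing vote as NO reflects the usual timeout-means-abort convention.

```python
from enum import Enum
from typing import Dict, Optional


class Vote(Enum):
    YES = "yes"
    NO = "no"


class Decision(Enum):
    COMMIT = "commit"
    ABORT = "abort"


def coordinator_decide(votes: Dict[str, Optional[Vote]]) -> Decision:
    """2PC phase 2: commit only if every participant voted YES in phase 1.
    A missing vote (None, e.g. a timeout) is treated as NO."""
    if all(v is Vote.YES for v in votes.values()):
        return Decision.COMMIT
    return Decision.ABORT


def can_decide_alone(my_vote: Vote, decision_received: Optional[Decision]) -> bool:
    """Why 2PC blocks: a participant that voted YES but never hears the decision
    (e.g. the coordinator crashed) cannot safely commit or abort on its own,
    unless it can learn the outcome from another participant."""
    if decision_received is not None:
        return True
    return my_vote is Vote.NO  # a NO voter knows the outcome must be ABORT
```

3PC removes this window by adding an extra phase before commit, at the cost of another round trip. 2.5PC, per the description above, aims to keep the non-blocking property without that cost.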

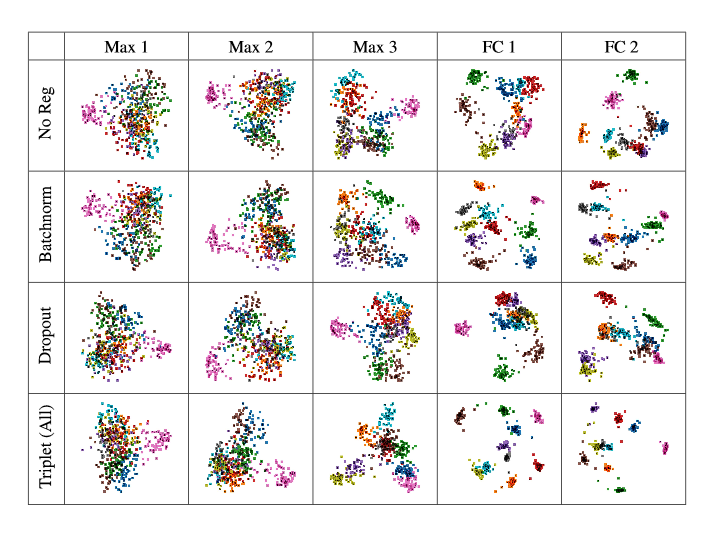

Triplet Loss for Regularizing Deep Neural Networks

I took a machine learning course in my first semester at Michigan that covered the theoretical and mathematical foundations of several machine learning algorithms. For the final project in the course, my project group and I investigated a novel method for regularizing neural networks that applies a triplet loss term at intermediate hidden layers. The regularization term induces large margins in the hidden states of the network. We found that our method improved both generalization performance and robustness to adversarial examples on the MNIST and CIFAR-10 datasets. We presented our method at a poster session featuring other projects from the course, and our project won first prize in the open-ended project track.
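A triplet loss compares an anchor with a positive (same class) and a negative (different class) and is zero once the negative is at least a margin farther from the anchor than the positive. The sketch below shows the standard hinge form on hidden-layer activation vectors, plus a hypothetical way to add it to the task loss. The post does not specify the distance function, margin, weight λ (`lam`), or how triplets were sampled, so Euclidean distance, margin = 1.0, and λ = 0.1 are assumptions.

```python
import math
from typing import List, Tuple

Vector = List[float]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor: Vector, positive: Vector, negative: Vector,
                 margin: float = 1.0) -> float:
    """max(0, d(a, p) - d(a, n) + margin): zero once the negative is at least
    `margin` farther from the anchor than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def regularized_loss(task_loss: float,
                     hidden_triplets: List[Tuple[Vector, Vector, Vector]],
                     lam: float = 0.1, margin: float = 1.0) -> float:
    """Hypothetical combination: task loss plus a weighted triplet term summed over
    (anchor, positive, negative) activations taken from intermediate layers."""
    reg = sum(triplet_loss(a, p, n, margin) for a, p, n in hidden_triplets)
    return task_loss + lam * reg
```

Penalizing hidden activations this way pushes examples of different classes apart inside the network, which is the margin-inducing effect described above.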

Deep.ditch: Computer Vision for Automated Road Damage Detection

I became interested in machine learning as a junior in college. I asked one of my professors and mentors, Dr. Shiyong Lu, whether he knew of any interesting projects I could work on to learn more about the topic. He pointed me toward a computer vision competition hosted by a workshop at the 2018 IEEE Conference on Big Data.

The goal of the competition was to detect and localize instances of road damage in images. I taught myself deep learning and PyTorch, trained my model, wrote a report, and submitted my results. I placed 10th overall, and my report was accepted by the workshop's reviewers (not bad for an undergrad working alone). Unfortunately, I was unable to attend the conference, so my report went unpublished, but hey, I was happy it got accepted.
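Localization quality in object detection is usually measured with intersection over union (IoU) between a predicted box and a ground-truth box. A prediction counts as a correct detection when its IoU exceeds a threshold. The sketch below computes IoU for axis-aligned boxes. The 0.5 threshold is a common convention assumed here, not a detail taken from the competition rules, and the function names are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """Count a detection as correct if it overlaps the ground truth enough."""
    return iou(pred, truth) >= threshold
```

Counting true positives, false positives, and missed ground-truth boxes with this rule is the basis for precision, recall, and F1 scores on a detection benchmark.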

In my senior year, I led a capstone project team that deployed my model in an iOS app. The model runs locally on the device and is used to detect road damage and report it to a remote service.
